Startup

It Happened Again.

I’ve been seeing a lot of ultra technical posts fly past my news feed lately and I’m tired of it. There’s too much information out there, too many analyses of vague hardware capabilities, too much handwaving in the direction of compiler internals.

It’s too much.

Take it out. I know you’ve got it with you. I know all my readers carry them at all times.

That’s right.

It’s time to make some pasta.

Everyone understands pasta.

Target Locked

Today I’ll be firing up the pasta maker on this ticket that someone nerdsniped me with. This is the sort of simple problem that any of us smoothbrains can understand: app too slow.

Here at SGC, we’re all experts at solving app too slow by now, so let’s take a gander at the problem area.

I’m in a hurry to get to the gym today, so I’ll skip over some of the less interesting parts of my analysis. Instead, let’s look at some artisanal graphics.

This is an image, but let’s pretend it’s a graph of the time between when an app is started to when it displays its first frame:

The tail of the arrow is when the user launched the app, the body of the arrow is what happens during “startup”, and the head of the arrow is when the app has displayed its first frame to the user. The “startup” period is what the user perceives as latency. More technical blogs would break down here into discussions and navel-gazing about “time to first light” and “photon velocity” or whatever, but we’re keeping things simple. If SwapBuffers is called, the app has displayed its frame.

Where are we at with this now?

Initial Findings

I did my testing on an Intel Icelake CPU/GPU because I’m lazy. Also because the original ticket was for Intel systems. Also because deal with it, this isn’t an AMD blog.

The best way to time this is to:

- add an `exit` call at the end of `SwapBuffers`
- run the app in a `while` loop using `time`
- evaluate the results
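For anyone who'd rather not babysit a shell loop, the steps above can be sketched roughly like this. It's a minimal sketch, not the harness I actually used: `time_startup` and `summarize` are made-up names, and it assumes the app has already been patched to exit right after its first `SwapBuffers`, so that process lifetime approximates startup latency.

```python
import os
import statistics
import subprocess
import time


def time_startup(cmd, runs=10, extra_env=None):
    """Run `cmd` `runs` times and return each run's wall-clock time in ms.

    Assumption: the app exits on its own right after the first
    SwapBuffers, so the time until the process ends is the startup time.
    """
    env = dict(os.environ)
    env.update(extra_env or {})  # hypothetical hook for per-driver settings
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        # Output is discarded; only the elapsed wall-clock time matters.
        subprocess.run(cmd, env=env, stdout=subprocess.DEVNULL,
                       stderr=subprocess.DEVNULL, check=False)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples):
    """Return (mean, min, max) of the samples, all in milliseconds."""
    return statistics.mean(samples), min(samples), max(samples)
```

Something like `summarize(time_startup(["gtk4-demo"], runs=20))` would then give one number per driver to compare. Picking the driver is up to the caller; passing `extra_env={"MESA_LOADER_DRIVER_OVERRIDE": "zink"}` is one way that could work, though that's an assumption about the setup and not something the ticket specifies.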

On iris, the average startup time for gtk4-demo was between 190 and 200ms.

On zink, the average startup time was between 350 and 370ms.

Uh-oh.

More Graphics (The Fun Kind)

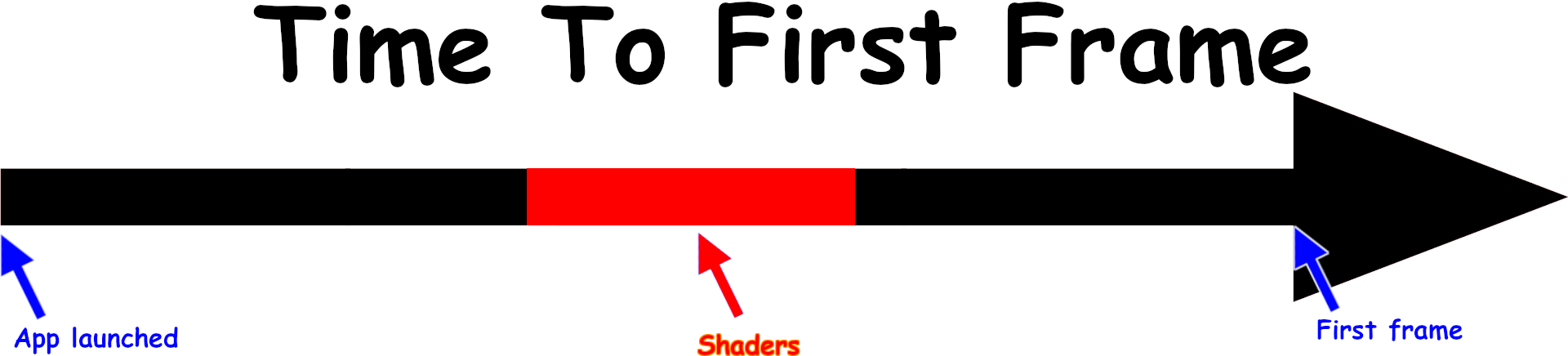

Initial analysis revealed something very stupid for the zink case: a lot of time was being spent on shaders.

Now, I’m not saying a lot of time was spent compiling shaders. That would be smart. Shaders have to be compiled, and it’s not like that can be skipped or anything. A cold run of this app that compiles shaders takes upwards of 1.0 seconds on any driver, and I’m not looking to improve that case since it’s rare. And hard. And also I gotta save some work for other people who want to make good blog posts.

The problem here is that when creating shaders, zink blocks while it does some initial shader rewrites and optimizations. This is like if you’re going to make yourself a sandwich, before you put smoked brisket on the bread you have to first slice the bread so it’s ready when you want to put the brisket on it. Sure, you could slice it after you’ve assembled your pile of pulled pork and slaw, but generally you slice the bread, you leave the bread sitting somewhere while you find/make/assemble the burnt ends for your sandwich, and then you finish making your sandwich. Compiling shaders is basically the same as making a sandwich.

But slicing bread takes time. And when you’re slicing the bread, you’re not doing anything else. You can’t. You’re holding a knife and a loaf of bread. You’re physically incapable of doing anything else until you finish slicing.

Similarly, zink can’t do anything else while it’s doing that shader creation. It’s sitting there creating the shaders. And while it’s doing that, the rest of the app (or just the main GL thread if glthread is active) is blocked. It can’t do anything else. It’s waiting on zink to finish, and it cannot make forward progress until the shader creation has completed.

Now this process happens dozens or hundreds of times during app startup, and every time it happens, the app blocks. Its own initialization routines–reading configuration data, setting up global structs and signal handlers, making display server connections, etc–cannot proceed until GL stops blocking.

If you’re unsure where I’m going with this, it’s a bad thing that zink is slicing all this bread while the app is trying to make sandwiches.

Improvement

The year is whatever year you’re reading this, and in that year we have very powerful CPUs. CPUs so powerful that you can do lots of things at once. Instead of having only two hands to hold the bread and slice it, you have your own hands and then the hands of another 10+ of your clones which are also able to hold bread and slice it. So if you tell one of those clones “slice some bread for me”, you can do other stuff and come back to some nicely sliced bread. When exactly that bread arrives is another issue depensynchronizationding on how well you understand the joke here.

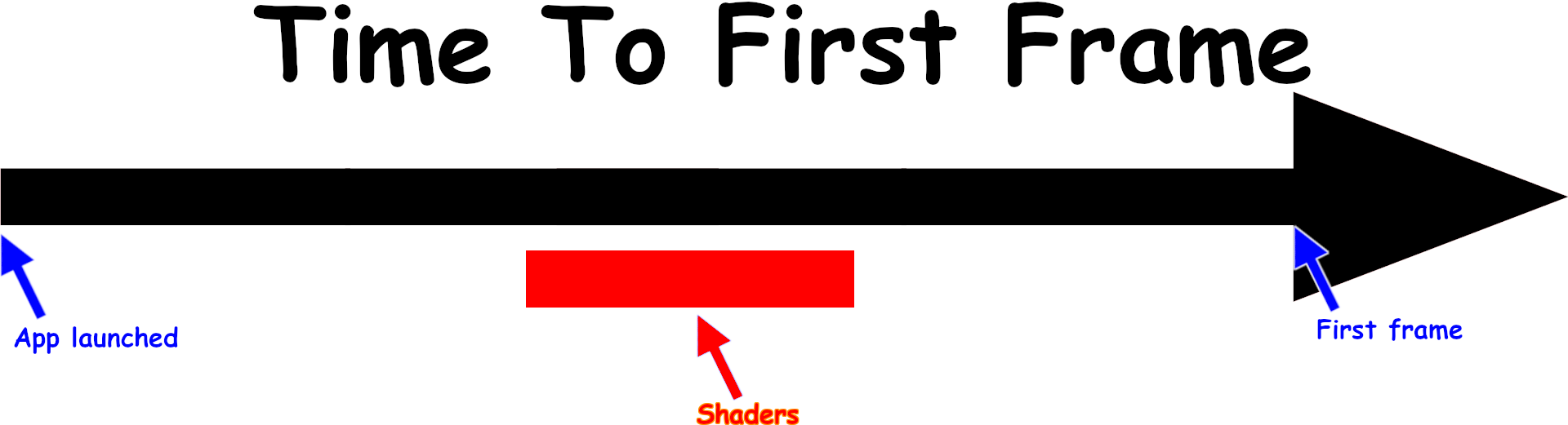

But this is me, so I get all the jokes, and that means I can do something like this:

By moving all that bread slicing into a thread, the rest of the startup operations can proceed without blocking. This frees up the app to continue with its own lengthy startup routines.
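If the sandwich metaphor isn't doing it for you, here's the same idea as a toy. This is a hedged sketch in Python, not zink's code (zink is C and lives in Mesa): `slice_bread`, `Shader`, and `startup` are all invented for illustration, and the sleeps stand in for real work. What it shows is the shape of the change: shader creation hands the expensive step to a worker and returns immediately, and the caller only blocks at the point where the result is actually needed.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slice_bread(source):
    """Stand-in for the blocking shader rewrite/optimization step."""
    time.sleep(0.05)  # pretend this is expensive
    return f"optimized({source})"


class Shader:
    def __init__(self, source, pool):
        # Kick off the expensive step on a worker thread and return at once.
        self._future = pool.submit(slice_bread, source)

    def get(self):
        # Synchronization point: block only when the result is really needed.
        return self._future.result()


def startup(sources, app_init_seconds=0.05):
    """Create shaders in the background while the 'app' does its own init."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        shaders = [Shader(s, pool) for s in sources]  # does not block
        time.sleep(app_init_seconds)                  # the app's own startup work
        return [s.get() for s in shaders]             # first draw needs them
```

The `get` call is where the "when exactly does the bread arrive" question gets answered: if the worker has already finished, it returns right away, and if not, the caller waits there instead of at creation time.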

After the change, zink starts up in an average of 260-280ms, a 25% improvement.

I know not everyone wants pasta on their sandwiches, but that’s where we ended up today.

Not The End

That changeset is the end of this post, but it’s not the end of my investigation. There are still mysteries to uncover here.

Like why the farfalle is this app calling glXInitialize and eglInitialize?

Can zink get closer to iris’s startup time?

We’ll find out in a future installment of Who Wants To Eat Lunch?